Do those live chat tools actually help your business? Will they get you more customers by allowing your visitors to chat directly with your team?

As with most tests, you can come up with theories that sound great for both sides.

Pro Live Chat Theory: A live chat tool helps people get their questions answered faster and see the value of your product, which leads to more signups when people see how willing you are to help them.

Anti Live Chat Theory: It’s one more element that will distract people from your primary CTAs, so conversions will drop when you add it to your site.

These aren’t the only theories either. We could come up with dozens on both sides.

But which is it? Do signups go up or down when you put a live chat tool on the marketing site of your SaaS app?

It just so happens I ran this exact test while I was at Kissmetrics.

How We Set Up the Live Chat Tool Test

Before we ran the test, we already had Olark running on our pricing page. The Sales team requested it and we launched without running it through an A/B test. Anecdotally, it seemed helpful. An occasional high-quality lead would come through and it would help our SDR team disqualify poor leads faster.

Around September 2014, the Sales team started pushing to have Olark across our entire marketing site. Since I had taken ownership of signups, our marketing site, and our A/B tests, I pushed back. We weren’t just going to launch it; it needed to go through an A/B test first. I was pro-Olark at this point but wanted to make sure we weren’t cannibalizing our funnel by accident.

We got it slotted for an A/B test in Oct 2014 and decided to test it on 3 core pages of our marketing site: our Features, Customers, and Pricing pages.

Our control didn’t have Olark running at all. This means that we stripped it from our pricing page for the control. Only the variant would have Olark on any pages.

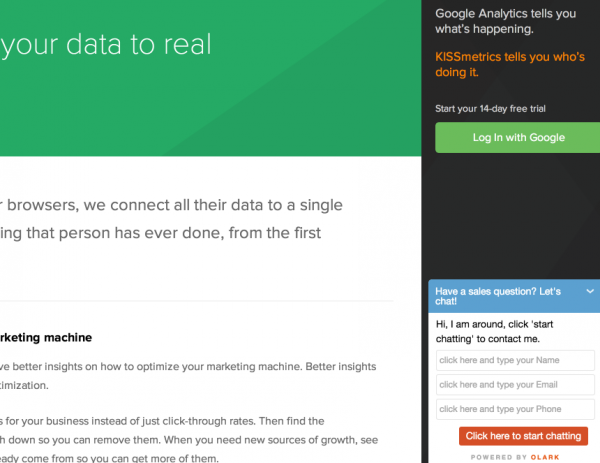

Here’s what our Olark popup looked like during business hours:

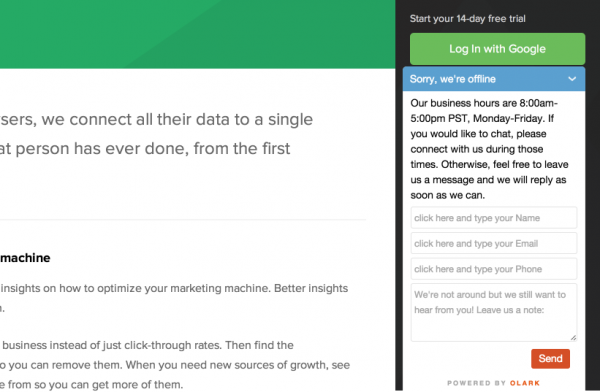

And here it is after-hours:

Looking at the popups now, I wish I had done a once-over on the copy. It’s pretty bland and generic. Better copy might have gotten us better results. At the time, I decided to test whatever Sales wanted since this test was coming from them.

Setting up the A/B test was pretty simple. We used an internal tool to split visitors into variants randomly (this is how we ran most of our A/B tests at Kissmetrics). Half our visitors randomly got Olark, the other half never saw it. Then we tagged each group with Kissmetrics properties and used our own Kissmetrics A/B Test Report to see how conversions changed in our funnel.
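
If you want to reproduce this kind of 50/50 split yourself, here is a minimal sketch. It is not the internal tool we used. It swaps in a common technique, hashing a visitor ID, so that the same visitor always lands in the same group. The function name, the `experiment` label, and the visitor ID format are all assumptions made for illustration.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "live-chat") -> str:
    """Place a visitor in 'control' or 'variant' with roughly 50/50 odds.

    Hypothetical helper: hashing the experiment name together with the
    visitor ID keeps the assignment stable across page views without
    storing any state.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 2  # 0 or 1, effectively uniform
    return "variant" if bucket == 1 else "control"


if __name__ == "__main__":
    # Made-up visitor IDs, only to show the call.
    for vid in ["visitor-1", "visitor-2", "visitor-3"]:
        print(vid, assign_variant(vid))
```

Only visitors in the `variant` group would be shown the chat widget. The group label is also what you would record as a property on each visitor so your analytics tool can compare the two funnels.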

So how did the data play out anyway?

Not great.

Our Live Chat A/B Test Results

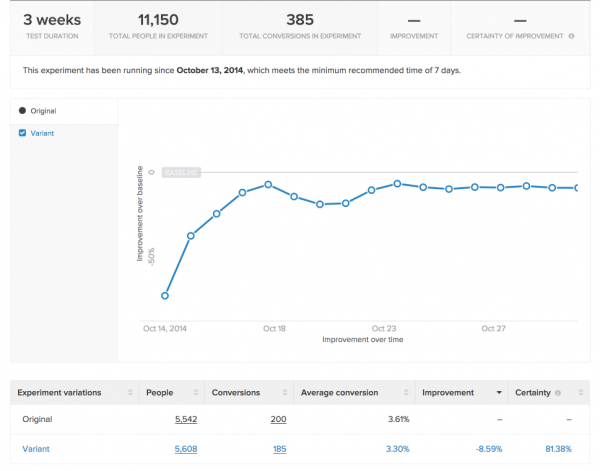

Here’s what Olark did to our signups:

A decrease of 8.59% at 81.38% statistical significance. I can’t say that we have a confirmed loser at this point. I prefer 99% statistical significance for those kinds of claims. But that data is not trending towards a winner.

How about activations? Did it improve signup quality and get more people to install Kissmetrics? That step of the funnel looked even worse:

A 22.14% decrease in activations at 97.32% statistical significance. Most marketers would declare this a confirmed loser since we hit the 95% statistical significance threshold. Even if you push for 99% statistical significance, the results are not looking good at this point.
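
For anyone who wants to sanity-check figures like these on their own tests, here is a rough sketch of how a relative change and a significance level can be computed from raw counts. It assumes a standard two-proportion z-test with a two-sided p-value. The Kissmetrics A/B Test Report may calculate significance differently, and the counts below are made up for illustration. They are not the data from this test.

```python
import math


def relative_change(control_rate: float, variant_rate: float) -> float:
    """Relative lift (positive) or drop (negative) of variant vs. control."""
    return (variant_rate - control_rate) / control_rate


def significance(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-proportion z-test; returns (z, confidence), confidence = 1 - p.

    a = control, b = variant. Assumes both groups have visitors and the
    pooled conversion rate is strictly between 0 and 1.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # For a standard normal, the two-sided p-value is erfc(|z| / sqrt(2)),
    # so the confidence level is erf(|z| / sqrt(2)).
    confidence = math.erf(abs(z) / math.sqrt(2))
    return z, confidence


if __name__ == "__main__":
    # Hypothetical counts: 10,000 visitors per group.
    z, conf = significance(conv_a=500, n_a=10_000, conv_b=450, n_b=10_000)
    change = relative_change(500 / 10_000, 450 / 10_000)
    print(f"relative change: {change:.2%}, z = {z:.2f}, confidence = {conf:.2%}")
```

The thing to notice is that a drop can look large in relative terms and still sit below a 95% or 99% threshold if the sample is small. That is exactly the gray zone the signup number above fell into.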

What about customers? Maybe it increased the total number of new customers somehow? I can’t share that data but the test was inconclusive that far down the funnel.

The Decision – Derailed by Internal Politics

So here’s what we know:

- Olark might decrease signups by a small amount.

- Olark is probably decreasing Kissmetrics installs.

- The impact on customer counts is unknown.

Seems like a pretty straightforward decision, right? We’re looking at possible hits on signups and activations, then a complete roll of the dice on customers. These aren’t the kind of odds I like to play with. Downside at the top of the funnel with a slim chance of success at the bottom. We should have taken it down, right?

Unfortunately, that’s not what happened.

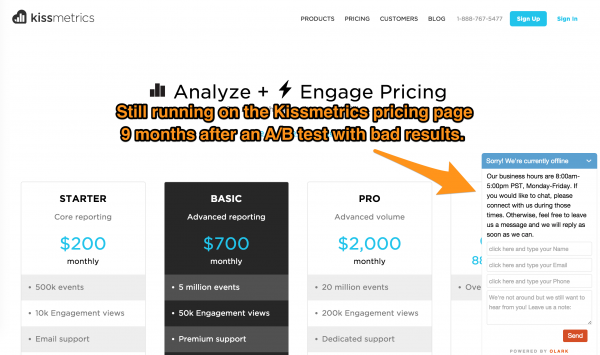

Olark is still live on the Kissmetrics site 9 months after we did the test. If you go to the pricing page, it’s still there:

Why wouldn’t we kill a bad test? Why would we let a bad, risky variant live on?

Internal politics.

Here’s the thing: just because you have data doesn’t mean that decisions get made rationally.

I took these test results to one of our Sales directors at the time and said that I was going to take Olark off the site completely. That caused a bit of a firestorm. Alarms got passed up the Sales chain and I found myself in a meeting with the entire Sales leadership.

I wanted Olark gone. Sales was 100% against me.

Live chat is considered a best practice (or at least it was a best practice at one point). It’s a safe choice for any SaaS leadership team. I have no idea HOW it became a best practice considering the data I found but that’s not the point. There are plenty of best practices that sound great but actually make things worse.

Here’s what the head of Sales told me: “Salesforce uses live chat so it should work for us too.”

But following tactics from industry leaders is the fastest path to mediocrity for a few reasons:

- They might be testing it themselves to see if it works. You don’t know if it’s still mid-test or a win they’ve decided to keep.

- They might not have tested it. They could be following best practices themselves and have no idea if it actually helps.

- They may have gotten bad data but decided to keep it because of internal politics.

- Even if it does work for them, there’s no guarantee that it’ll work for you. I’ve actually found most tactics to be very situational. There are a few cases where a tactic helps immensely, but most of the time it’s a waste of effort and has no impact.

It’s also difficult to understand how a live chat tool would decrease conversions. Maybe it’s a distraction, maybe not. But when you’re an SDR and you see good opportunities come in that help you meet your qualified lead quotas, it’s not easy to separate that anecdotal experience from the data on the entire system.

But none of this mattered. Sales was completely adamant about keeping it.

The ambiguity on customer counts didn’t help either. As long as it was an unknown, arguments could still be made in favor of Olark.

Why didn’t I let the test run longer and get enough data on how it impacted new customer counts? With how close the data was, we would have needed to run the test for several months before getting anywhere close to an answer. Since I had several other tests in my pipeline, I faced serious opportunity costs if I let the test run. Running one test for 3 months means not running 3-4 other tests that have a chance at being major wins.

So I faced a choice. I could have removed Olark if I was stubborn enough. My team had access to the marketing site, Sales didn’t. But standing my ground would start an internal battle between Marketing and Sales. It’d get escalated to our CEO and I’d spend the next couple of weeks arguing in meetings instead of trying to find other wins for the company. Regardless of the final decision, the whole ordeal would fray relationships between the teams. I’d also burn a lot of social capital if I decided to push my decision through. With the decrease in trust, there would be all sorts of long-term costs that would prevent us from executing effectively on future projects.

I pushed back and luckily got agreement for not launching it on the Features or Customers pages. But Sales wouldn’t budge on the Pricing page. I chose to let it drop and it lives to this day.

That’s how I launched a variant that decreased conversions.

Should You Use a Live Chat Tool on Your Site?

Could a live chat tool increase the conversions on your site? Possibly. Just because it didn’t work for me doesn’t mean it won’t work for you.

Are there other places that I would place a live chat tool? Maybe a support site or within a product? Certainly. There are plenty of cases where acquisition matters less than helping people as quickly as possible.

Would I use a live chat tool at an early-stage startup to collect every possible bit of feedback I could? Regardless of what it did to signups? Most definitely. Any qualitative feedback at this stage is immensely valuable as you iterate to product/market fit. Sacrificing a few signups is well worth the cost of being able to chat with prospects.

If I was trying to increase conversions to signups, activations, and customers, would I launch a live chat tool on a SaaS marketing site without A/B testing it first? Absolutely not. Since this test didn’t go well, I wouldn’t launch a live chat tool without conclusive data proving that it helped conversions.

Olark and the rest of the live chat companies have great products. There are definitely ways for them to add a ton of value. Getting lots of qualitative feedback at an early-stage startup is probably the strongest use case that I see. But if your goal is to increase signups, activations, and customers, I’d be very careful about assuming that a live chat tool will help you.