Growth graphs like this don’t come around too often:

I’ve gotta hand it to Slack: they’re playing the PR game pretty well too. Headline after headline on TechCrunch and Techmeme keeps telling us all how crazy the growth is over there. They’ve already built the brand to go with it.

But here’s the thing about rocketships: they either go further than you ever thought possible or they blow up in your face.

It all comes down to momentum. Keep it up and you’ll quickly dominate your market.

But once you start to lose momentum, the rocketship rips itself apart. Competitors catch up, market opportunities slip away, talent starts to leave, and growth stalls. It’s a nasty feedback loop that’ll do irrecoverable damage. Once that rocketship lifts off, you either keep accelerating growth or momentum slips away as you come crashing back down. Grow or die.

There’s no room for mistakes. But when you’re driving growth, there are two forces that will consistently try to bring you crashing back down.

1) The Counter-Intuitive Nature of Growth

At KISSmetrics, I launched a bunch of tests that didn’t make a single bit of sense when you first looked at them. And many of them were our biggest winners.

Here’s a good example. Spend any time learning about conversion optimization and you’ll come across advice telling you to simplify your funnel. Get rid of steps, get rid of form fields, make it easier. Makes sense, right? Less effort means more people get to the end. In some cases, this is exactly what happens.

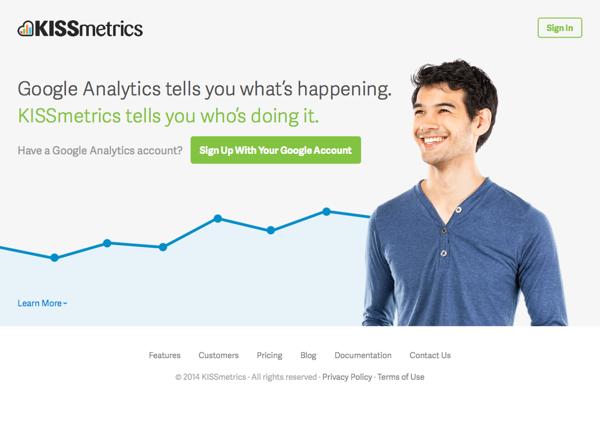

In other cases, you’ll grow faster by adding extra steps. Yup, make it harder to get to the end and more people finish the funnel. This is exactly what happened during one of our homepage tests. We added an entire extra step to our signup flow and instantly bumped our conversions to signups by 59.4%.

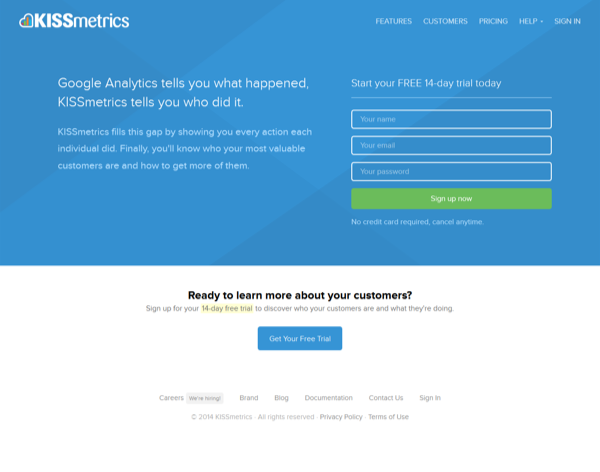

Here’s the control:

Here’s the variant:

In this case, the variant dropped people straight into a Google OAuth flow. But we didn’t get rid of our signup form, since we still needed people to fill out the lead info for our Sales team.

Steps in the control:

- Homepage

- First part of signup form

- Second part of signup form (appeared as soon as you finished the first 3 fields)

Steps in the variant:

- Homepage

- Google account select

- Google OAuth verification

- Signup form completion

You could say the minimalist design on that variant helped give us a winner, which is true. But we saw this “add extra steps to get more conversions” pattern across multiple tests. It works like magic on homepages, signup forms, and webinar registrations. It’s one of my go-to growth hacks at this point.

Counter-intuitive results come up so often that it’s pretty difficult to call winners before you launch a test. At KISSmetrics, we had some of the best conversion experts in the industry like Hiten Shah and Neil Patel. Even with this world-class talent, we STILL only found winners 33% of the time. That’s right, the majority of our tests FAILED.

We ran tests that I would have put good money on. And guess what? They didn’t move our metrics even a smidge. The majority of our tests made absolutely zero impact on our growth.

It takes a LOT of testing to find wins like this. So accelerating growth isn’t a straightforward process. You’ll run into plenty of dead ends and rabbit holes as you learn which levers truly drive growth for your company.

2) You’ll Get Blindsided by False Positives

Fair enough, growth is counter-intuitive. Let’s just A/B test a bunch of stuff, wait for 95% statistical significance, and then launch the winners. Problem solved!

Not so fast…

That 95% statistical significance that you’ve placed so much faith in? It’s got a major flaw.

A/B tests will lead you astray if you’re not careful. In fact, they’re a lot riskier than most people realize and are riddled with false positives. Unless you run them right, your conversions will start bouncing up and down as bad variants get launched by accident.

Too much variance in the system and too many false positives mean you’re putting a magical growth rate at serious risk. Slack wants to take as much risk off the table as possible while still chasing big wins, and the normal approach to statistical significance doesn’t cut it. At 95% statistical significance, too many false positives get launched, dragging down conversions and slowing momentum.

Let’s take a step back. The 95% statistical significance threshold comes from scientific research. It is widely accepted as the standard for deciding whether the difference between two data sets is more than just random noise. But here’s what gets missed: 95% statistical significance only works if you’ve done several other key steps ahead of time. First, you need to decide the minimum percentage improvement you want to detect, like a 10% change. THEN you need to calculate the sample size your experiment requires to detect it. The results don’t mean anything until you hit that minimum sample size.
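Here’s roughly what that sample-size step looks like. This is a minimal Python sketch using the standard normal-approximation formula for a two-sided, two-proportion z-test. The 10% baseline signup rate and the 80% statistical power are assumptions for illustration, not numbers from our tests. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH arm to detect `relative_lift` over `baseline_rate`.

    Normal-approximation formula for a two-sided, two-proportion z-test.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical funnel: 10% baseline signup rate, detect a 10% relative lift.
n = sample_size_per_variant(baseline_rate=0.10, relative_lift=0.10)
print(f"{n} visitors per variant, {2 * n} in total")
```

Under those assumptions, the formula asks for roughly 15,000 visitors per variant before a 10% lift can be reliably detected. That’s why doing the stats properly drives sample sizes way up.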

Want to know how many acquisition folks calculate the sample size based on the minimum difference in conversions that they want to detect? Zero. I’ve never heard of a single person doing this. Let me know if you do, I’ll give you a gold star.

But I don’t really blame anyone for not doing all this extra work. It’s a pain. There are already a ton of steps needed to launch any given A/B test: hypothesis prioritization, estimating impact on your funnel, copy, wireframes, design, front-end and back-end engineering, tracking, making the final call, and documentation. No one’s particularly excited about adding a bunch of hard-core statistical steps to the workflow. This also bumps the required sample sizes into the thousands. That’s probably not a major problem for Slack at this point, but it will dramatically slow the number of tests they can launch. Finding those big wins is a quantity game. If you want higher conversions and faster viral loops, it’s all about finding ways to run more tests.

When you’re in Slack’s position, the absolute last thing you want is to expose yourself to any unnecessary variance in your funnel and viral loops. Every single change needs to accelerate the funnel like clockwork. There’s too much at stake if any momentum is lost at this point. So is there an alternative to doing all that heavy-duty stats work for each A/B test? Yes, there is.

The Key to Keeping Rocketships Flying

Right now, the team at Slack needs to be focusing on one thing: how not to lose.

Co-founder Stewart Butterfield mentioned that he’s not sure where the growth is coming from. This is a dangerous spot to be in. As they start to dive into their funnel, there’s a serious risk of launching bad winners from false positives. They’ll need every last bit of momentum if they want to avoid plateauing early.

As it turns out, there is a growth strategy that takes these A/B testing risks off the table. It’s disciplined, it’s methodical, and it finds the big wins without exposing you to the normal volatility of A/B testing. I used it at KISSmetrics to grow our monthly signups by over 267% in one year.

Here’s the key: bump your A/B decision requirement to 99% statistical significance. Don’t launch a variant unless it hits 99%. If you’re below 99%, keep the control. And run everything you can through an A/B test.

Dead serious, the control reigns unless you hit 99% statistical significance. You’ll be able to keep chasing counter-intuitive big wins while protecting your momentum.
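Here’s a minimal sketch of the 99% rule in Python. It assumes “statistical significance” means one minus the two-sided p-value of a pooled two-proportion z-test. Your testing tool may calculate it differently (some report a one-sided number), so treat the function names and the exact test as illustrative.

```python
import math
from statistics import NormalDist


def confidence_level(control_visitors, control_conversions,
                     variant_visitors, variant_conversions):
    """1 - two-sided p-value from a pooled two-proportion z-test."""
    p_c = control_conversions / control_visitors
    p_v = variant_conversions / variant_visitors
    pooled = ((control_conversions + variant_conversions)
              / (control_visitors + variant_visitors))
    se = math.sqrt(pooled * (1 - pooled)
                   * (1 / control_visitors + 1 / variant_visitors))
    if se == 0:
        return 0.0  # no conversions (or all conversions): nothing to compare
    z = (p_v - p_c) / se
    return 1 - 2 * (1 - NormalDist().cdf(abs(z)))


def decide(control_visitors, control_conversions,
           variant_visitors, variant_conversions, threshold=0.99):
    """The control reigns unless the variant is ahead at >= `threshold`."""
    variant_ahead = (variant_conversions / variant_visitors
                     > control_conversions / control_visitors)
    conf = confidence_level(control_visitors, control_conversions,
                            variant_visitors, variant_conversions)
    return "variant" if variant_ahead and conf >= threshold else "control"


# Hypothetical result: 10% vs. 13% conversion with 1,000 visitors per arm.
print(decide(1000, 100, 1000, 130))                  # control
print(decide(1000, 100, 1000, 130, threshold=0.95))  # variant
```

That hypothetical 10% vs. 13% result clears 95% (it lands at about 96%) but not 99%, so under this rule the control stands.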

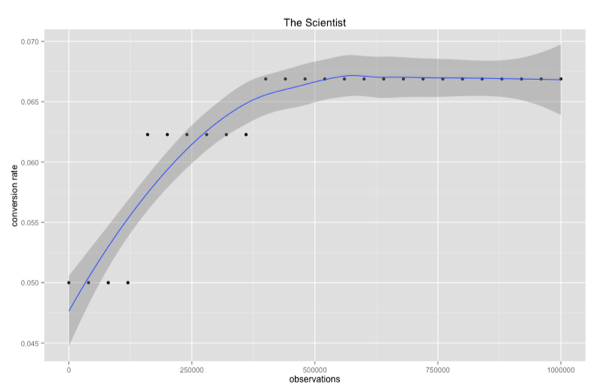

At KISSmetrics, we actually did a bunch of Monte Carlo simulations to compare different A/B Testing strategies over time.

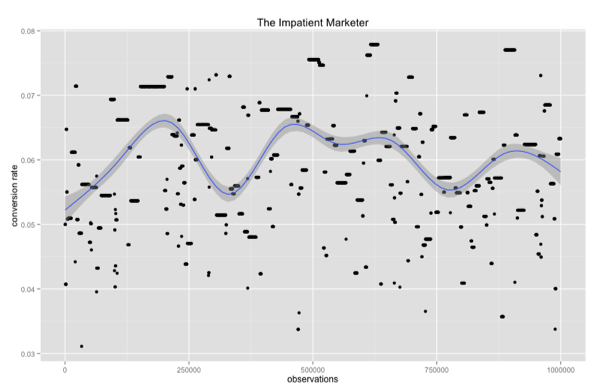

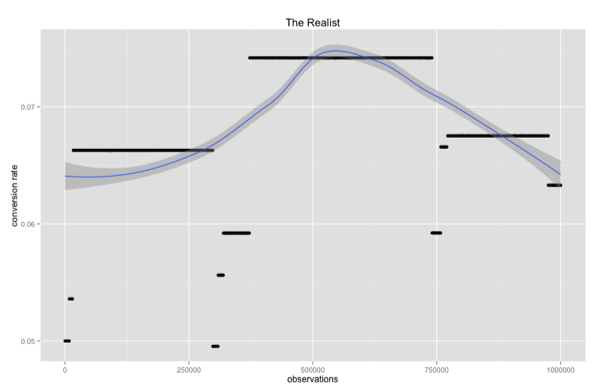

I’ve posted the results from 3 different strategies below. Basically, more area under the curve means more conversions earned. Each dot represents a test that looked like a winner. You’ll notice that many dots actually bring conversions down. Those come from false positives and not being rigorous enough with your A/B testing.

Here’s what you get if you use the scientific researcher strategy:

Not much variance in this system. Winners are almost always real winners.

Here’s your regular sloppy 95% statistical significance strategy that makes changes as early as 500 people in the test:

Conversions bounce around quite a bit. False wins come up often, which means that if you sit on a particular variation for long, it will drag conversions down and slow growth. There goes your momentum.
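To see why early peeking at 95% produces so many false wins, here’s a toy Python simulation of A/A tests. Both arms share the same true conversion rate, so any “winner” is a false positive. All of these are made up for illustration:

- the 5% conversion rate
- a peek every 500 people
- the 8,000-person cap
- counting “people in the test” across both arms

This is not the KISSmetrics simulation.

```python
import math
import random
from statistics import NormalDist


def confidence(n, conv_a, conv_b):
    """1 - two-sided p-value of a pooled two-proportion z-test, n people per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 0.0
    z = (conv_b - conv_a) / n / se
    return 1 - 2 * (1 - NormalDist().cdf(abs(z)))


def false_win_rate(threshold, first_look, true_rate=0.05, max_people=8_000,
                   look_every=500, n_sims=500, seed=7):
    """Share of A/A tests in which the variant gets declared a winner.

    Peeks every `look_every` people (split evenly across both arms) once
    `first_look` people are in, and ends the test at the first significant peek.
    """
    rng = random.Random(seed)
    step = look_every // 2  # people added to each arm between peeks
    false_wins = 0
    for _ in range(n_sims):
        n = conv_a = conv_b = 0  # n = people per arm so far
        while 2 * n < max_people:
            conv_a += sum(rng.random() < true_rate for _ in range(step))
            conv_b += sum(rng.random() < true_rate for _ in range(step))
            n += step
            if 2 * n >= first_look and confidence(n, conv_a, conv_b) >= threshold:
                if conv_b > conv_a:  # identical arms, so any variant "win" is false
                    false_wins += 1
                break  # significant either way, so this test is over
    return false_wins / n_sims


fpr_95 = false_win_rate(threshold=0.95, first_look=500)
fpr_99 = false_win_rate(threshold=0.99, first_look=2_000)
print(f"95%, peeking from 500 people:   {fpr_95:.1%} false winners")
print(f"99%, peeking from 2,000 people: {fpr_99:.1%} false winners")
```

Repeated peeking gives random noise many chances to cross the line. The first number should come out well above 5%, and the second should be much lower. Exact values depend on the assumptions and the seed.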

Now let’s look at the 99% strategy that waits for at least 2000 people in the test for a decent sample size:

There’s still a chance to pick up false winners here, but there’s a lot less variance than with 95%. Let’s quantify all 3 strategies real quick by calculating the area under each curve. Then we’ll be able to compare them instead of just eyeballing the simulations.

- Scientific researcher = 67759

- 95% statistical significance = 60532

- 99% statistical significance = 67896

Bottom line: the 99% strategy performs just as well as the scientific researcher and a lot better than the sloppy 95%. It’s also easy enough for any team to implement without having to do the extra stats work.
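For anyone who wants to try this kind of comparison themselves, here’s a stripped-down Monte Carlo sketch in Python. It is not the simulation behind the numbers above and won’t reproduce them. All of the following are assumptions:

- the 20% starting conversion rate
- the distribution of test effects (a small average loss with a wide spread)
- the 200,000-person traffic budget
- the 10,000-person cap per test
- the 13,000-person fixed sample for the researcher strategy, roughly what the standard sample-size formula gives for a 10% lift on a 20% baseline

Like the charts, it scores each strategy by the area under the curve. Here that area is the expected conversions earned across the whole traffic budget.

```python
import math
import random
from statistics import NormalDist

# Hypothetical strategies: (significance threshold, first peek, max people per test).
STRATEGIES = {
    "researcher (one look at a fixed sample)": (0.95, 13_000, 13_000),
    "sloppy 95% (peeks from 500 people)": (0.95, 500, 10_000),
    "99% (peeks from 2,000 people)": (0.99, 2_000, 10_000),
}


def confidence(n, conv_a, conv_b):
    """1 - two-sided p-value of a pooled two-proportion z-test, n people per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 0.0
    z = (conv_b - conv_a) / n / se
    return 1 - 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(threshold, first_look, max_people, budget=200_000,
             start_rate=0.20, look_every=500, seed=0):
    """Expected conversions earned while running tests back to back until
    `budget` people have gone through the funnel (the area under the curve)."""
    rng = random.Random(seed)
    rate = start_rate        # true conversion rate of the live experience (control)
    step = look_every // 2   # people added to each arm between peeks
    used, earned = 0, 0.0
    while used < budget:
        # Assumed idea quality: small average loss, wide spread of outcomes.
        variant_rate = min(max(rate * (1 + rng.gauss(-0.02, 0.10)), 0.0), 1.0)
        n = conv_a = conv_b = 0
        while 2 * n < max_people and used < budget:
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < variant_rate for _ in range(step))
            n += step
            used += 2 * step
            earned += step * (rate + variant_rate)  # expected, not sampled, conversions
            if 2 * n >= first_look and confidence(n, conv_a, conv_b) >= threshold:
                if conv_b > conv_a:
                    rate = variant_rate  # launch: the variant becomes the new control
                break  # significant either way, so this test is over
    return earned


results = {}
for name, (threshold, first_look, max_people) in STRATEGIES.items():
    runs = [simulate(threshold, first_look, max_people, seed=s) for s in range(5)]
    results[name] = sum(runs) / len(runs)
    print(f"{name}: {results[name]:,.0f} expected conversions")
```

The ranking you get depends heavily on the assumed effect distribution. Tweak `rng.gauss(-0.02, 0.10)` to see how sensitive each strategy is to how often your ideas actually hurt.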

The 99% rule is my main A/B testing rule but here are all of them:

- Control stands unless the variant hits a lift at 99% statistical significance.

- Run the test for at least a week to get a full business cycle.

- Get 2,000 people through the test so you have at least a halfway decent sample size.

- If the test looks like a loser or has an expected lift of less than 10%, kill it and move on to the next test. Only chase single-digit wins if you have a funnel with crazy volume.
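Here are those four rules as a hypothetical checklist function in Python. It takes the significance number from whatever testing tool you use rather than computing it. It also reads “expected lift” as the variant’s observed relative lift so far. Both are assumptions, as are the class and function names.

```python
from dataclasses import dataclass


@dataclass
class ExperimentSnapshot:
    days_running: int
    people_in_test: int   # both arms combined
    observed_lift: float  # variant vs. control, relative: 0.12 means +12%
    significance: float   # from your testing tool: 0.993 means 99.3%


def next_step(s: ExperimentSnapshot, min_days: int = 7, min_people: int = 2_000,
              min_lift: float = 0.10, threshold: float = 0.99) -> str:
    # Rules 2 and 3: no calls before a full week and 2,000 people.
    if s.days_running < min_days or s.people_in_test < min_people:
        return "keep running"
    # Rule 1: the control stands unless the variant is ahead at 99% significance.
    if s.observed_lift > 0 and s.significance >= threshold:
        return "launch variant"
    # Rule 4: losers and sub-10% lifts get killed so the next test can start.
    if s.observed_lift < min_lift:
        return "kill test, keep control"
    return "keep running"


print(next_step(ExperimentSnapshot(3, 5_000, 0.20, 0.999)))  # keep running
print(next_step(ExperimentSnapshot(8, 3_000, 0.15, 0.995)))  # launch variant
print(next_step(ExperimentSnapshot(8, 3_000, 0.04, 0.600)))  # kill test, keep control
```

The rules don’t say when to give up on a promising test that never reaches 99%, so this sketch just keeps it running. Lower `min_lift` only if your funnel has the volume to chase single-digit wins.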

I used these rules to double and triple multiple steps of the KISSmetrics funnel. They reduce the risk of damaging the funnel to the bare minimum, accelerate the learning of your team, and uncover the biggest wins. That’s how you keep your growth momentum.

Embedding A/B Tests Into the Slack Culture

I can give you the rules for how to run a growth program. But you know what? It won’t get you very far unless you instill A/B tests into the fabric of the company. The Slack team needs to pulse with A/B testing. Even the recruiters and support folks need to get excited about this stuff.

This is actually where I failed at KISSmetrics. Our Growth and Marketing teams understood A/B testing and our entire philosophy behind it. We cranked day in and day out. It was our magic sauce.

But the rest of the team? Did Sales or Support ever get it? Nope. Which meant I spent too much time fighting for the methodology instead of working on new tests. If I had spent more time bringing other teams into the fold from the beginning, who knows how much further we could have gone.

If I were at Slack, one of my main priorities would be to instill A/B testing in every single person at the company. Here are a few ideas on how I’d pull that off:

- Before each test, I’d show the entire company what we’re about to test. Then have everyone vote or bet on the winners. Get the whole company to put some skin in the game. Everyone will get a feel for how to accelerate growth consistently.

- Weekly A/B testing review. Make sure at least one person from each team is there. Go through all the active A/B tests, current results, which ones finished, final decisions, and what you learned from them. The real magic of A/B testing comes from what you learn on each test, so spread these lessons far and wide.

- Do monthly A/B testing growth talks internally. Include the rules for testing, why you A/B test, the biggest wins, and have people predict old tests so they get a feel for how hard it is to predict ahead of time. Get all new hires into these. Very few people have been through the A/B test grind; you need to get everyone up to speed quickly.

- Monthly brainstorm and review of all the current testing ideas in the pipe. Invite the whole company to these things. Always remember how hard it is to predict the big winners ahead of time; you want testing ideas coming at you from as many sources as possible.

Keep Driving The Momentum of That Slack Rocketship

I’m really hoping the team at Slack has already found ways to avoid all the pitfalls above. They’ve got something magical and it would be a shame to lose it.

To the women and gents at Slack:

- Follow the data.

- Get the launch tempo as high as possible for growth; you’ll need to run through an awful lot of ideas before you find the ones that truly make a difference.

- Only make changes at 99% statistical significance.

- Spread the A/B testing Kool-Aid far and wide.

- Don’t settle. You’ve got the magic, do something amazing with it.